An Assessment of Social Media Content Removal in France, Germany, and Sweden

- The over-removal of legal content on social media platforms raises concerns about the chilling effect on free expression and the potential suppression of legitimate discourse online.

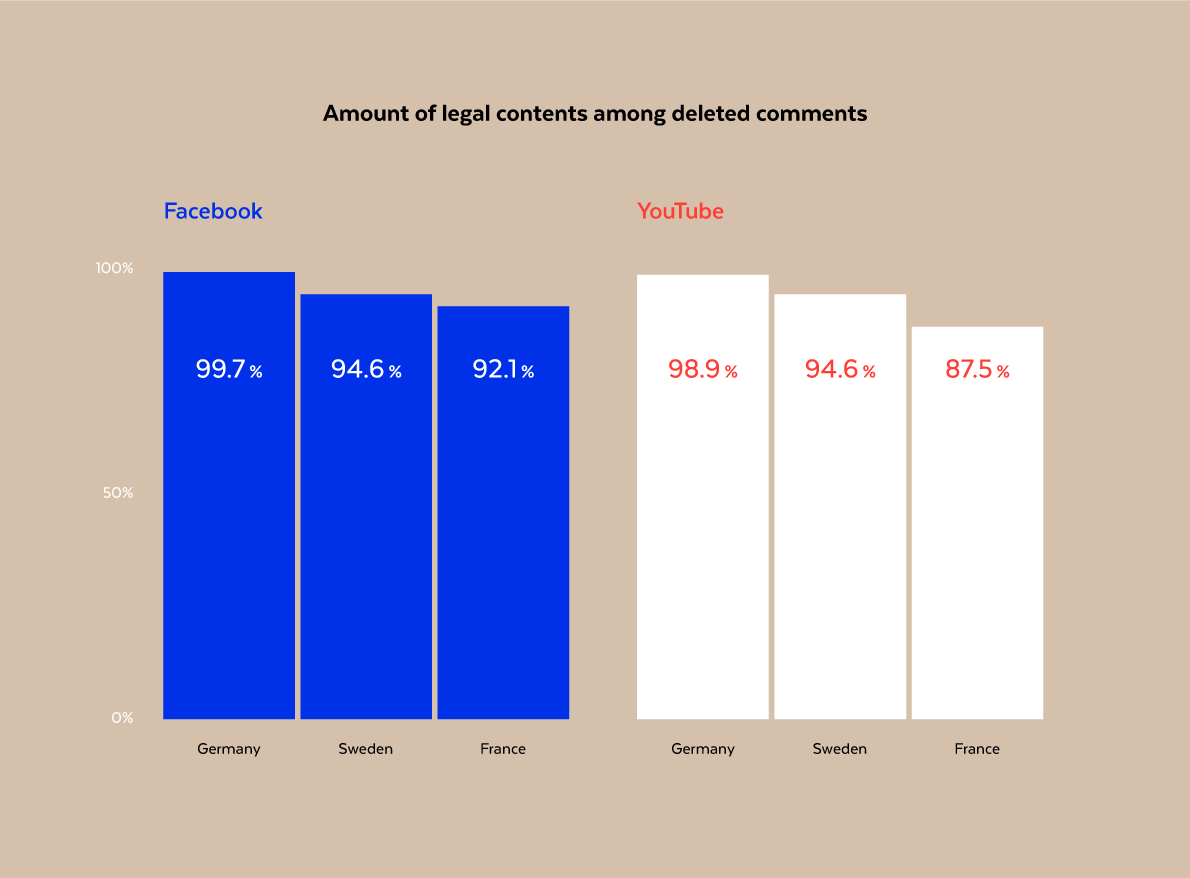

- This report found that a substantial majority (87.5% to 99.7%) of deleted comments on Facebook and YouTube in France, Germany, and Sweden were legally permissible, suggesting that platforms, pages, or channels may be over-removing content to avoid regulatory penalties.

- Only 25% of the examined pages or channels publicly disclosed specific content moderation practices, creating uncertainty for users about additional content rules beyond the general policies of platforms.

Executive Summary

Are recent Internet regulations effective in curbing the supposed “torrents” of hate speech on social media platforms, as some public officials claim? Or are these policies having the unintended consequence of platforms and users going overboard with moderation, thereby throwing out legal content with the proverbial bathwater?

The ubiquitous use of social media has no doubt added a complex dimension to discussions about the boundaries of free expression in the digital age. While the responsibility of content moderation on these platforms has fallen largely upon private entities, particularly the major tech companies, national and regional legislation across Europe has impacted their practices. For instance, in 2017, Germany enacted the Network Enforcement Act (NetzDG), which aimed to combat illegal online content such as defamation, incitement, and religious insults. In 2022, the European Union adopted a similar framework for policing illegal content online called the Digital Services Act (DSA).

This law will undoubtedly change the landscape for online speech as it goes into full effect. The DSA created a rulebook for online safety that imposes due process, transparency, and due diligence on social media companies. It was intended to create a “safe, predictable, and trusted online environment.” The underlying assumptions surrounding the passage of the DSA included fears that the Internet and social media platforms would become overrun with hate and illegal content. In 2020, leading EU Commissioner Thierry Breton asserted, “the Internet cannot remain a ‘Wild West’.” The DSA therefore sought to create “clear and transparent rules, a predictable environment and balanced rights and obligations.” In a similar vein, President Emmanuel Macron warned in 2018 about “torrents of hate coming over the Internet.”

But as our report seeks to demonstrate, these new rules are increasingly having real-world regulatory and policy consequences, while the potential scope of the DSA continues to broaden. In 2023, both Breton and Macron raised the possibility of using the DSA during periods of civil unrest to shut down social media platforms. Fortunately, this suggestion received a swift rebuke from civil society organizations, and EU backpedaling followed.

The rapid transformation of the DSA into a tool for broader regulations of Internet speech, including threats of wholesale shutdowns, necessitates a closer look at the underlying assumptions about online discourse. This report seeks to empirically test the validity of these strongly held convictions about the widespread proliferation of illegal hate speech on the Internet.

This report seeks to understand whether the assumptions underlying regulatory frameworks like NetzDG accurately reflect reality when it comes to the scale of illegal content online as well as the potential ramifications of the DSA. It examines how content moderation occurs on two major online platforms, Facebook and YouTube, analyzing the frequency of comment removals and the nature of the deleted comments. In a world that works the way policymakers intend, we would expect to find that most deleted comments constitute illegal speech.

To understand the nature of deleted comments, the authors gathered comments from 60 of the largest Facebook pages and YouTube channels in France, Germany, and Sweden (20 in each country) and tracked which comments disappeared within a two-week period between June and July 2023. While it was not feasible to ascertain who deleted a given comment (the platform itself, the page or channel administrators, or the users), the report can determine the scope and content of the deleted comments on the relevant Facebook pages and YouTube channels. Additionally, recent enforcement reports issued by Meta reveal a high percentage of proactive content moderation actions. Reports released for April through September of 2023 show that between 88.8% and 94.8% of content was ‘found and actioned’ by the company itself. Meta defines “taking action” as including removing content, covering a photo or video with a warning, or disabling accounts. For YouTube, no comparable statistics are available for action taken on comments (only on videos).

The collected comments were analyzed by legal experts to determine whether they were illegal based on the relevant laws in effect in each country. The deleted comments that were not found to be illegal were coded into several categories, including general expressions of opinion, incomprehensible comments, spam, derogatory speech, and legal hate speech. While there was some overlap among the comment categories, it is important to note that our legal experts did not find, for instance, that all hate speech comments would be considered illegal in every country. Additionally, the report analyzes the specific content rules, or lack thereof, for all the pages and channels under examination. These rules apply to content hosted by the pages or channels and complement Facebook’s and YouTube’s general content policies.

Key Findings

This analysis found that legal online speech made up most of the removed content from posts on Facebook and YouTube in France, Germany, and Sweden. Of the deleted comments examined across platforms and countries, between 87.5% and 99.7%, depending on the sample, were legally permissible.

The highest proportion of legally permissible deleted comments was observed in Germany, where 99.7% and 98.9% of deleted comments were found to be legal on Facebook and YouTube, respectively. This could reflect the impact of the German Network Enforcement Act (NetzDG) on the removal practices of social media platforms, which may over-remove content in order to avoid the legislation’s hefty fines. In comparison, the corresponding figure for Sweden is 94.6% for both Facebook and YouTube. France has the lowest percentage of legally permissible deleted comments: 92.1% of the deleted comments in the French Facebook sample and 87.5% of the deleted comments in the French YouTube sample.

In other words, a substantial majority of the deleted comments investigated are legal, suggesting that, contrary to prevalent narratives, over-removal of legal content may be a bigger problem than under-removal of illegal content.

A further breakdown of the findings reflects that on the basis of 1,276,731 collected comments, of which 43,497 were deleted, the report draws the following key conclusions:

- YouTube experienced the highest deletion rates of all comments, with removal rates of 11.46%, 7.23%, and 4.07% in Germany, France, and Sweden, respectively. On Facebook, the corresponding percentages were substantially lower, at 0.58%, 1.19%, and 0.46%.

- Among the deleted comments, the majority were classified as “general expressions of opinion.” In other words, these were statements that did not contain linguistic attacks, hate speech, or illegal content, such as expressing support for a controversial candidate in the abstract. On average, more than 56% of the removed comments fall into this category.

- Out of all the deleted comments, the percentage of illegal comments varies significantly across pages, channels, and countries. The highest proportion is found in France, where it accounts for 7.9% on Facebook pages and 12.5% on YouTube. In Germany, this fraction is markedly lower, with 0.3% on Facebook and 1.1% on YouTube. For investigated Swedish pages and channels, it stands at 5.4% for both Facebook and YouTube.

- The assessment reveals that only 25% of the examined pages or channels publicly disclose specific content moderation practices. This may generate uncertainty among users, who may be unable to tell whether specific content rules apply in addition to the platforms’ general content policies.
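
The percentages above are tied together by simple arithmetic, which the following minimal Python sketch reproduces from the figures quoted in this summary. It is an illustration only, not the authors' analysis code: the variable and function names are hypothetical, and the pooled deletion rate it prints is derived here from the two totals rather than reported by the study.

```python
# Totals quoted in this summary (names are illustrative, not from the report).
COLLECTED = 1_276_731
DELETED = 43_497

# Share of deleted comments judged legal, in percent, per (country, platform).
LEGAL_SHARE = {
    ("Germany", "Facebook"): 99.7,
    ("Germany", "YouTube"): 98.9,
    ("Sweden", "Facebook"): 94.6,
    ("Sweden", "YouTube"): 94.6,
    ("France", "Facebook"): 92.1,
    ("France", "YouTube"): 87.5,
}


def pooled_deletion_rate(deleted: int, collected: int) -> float:
    """Percentage of all collected comments that were later deleted."""
    return 100 * deleted / collected


def illegal_share(legal_pct: float) -> float:
    """Illegal share as the complement of the legal share, to one decimal."""
    return round(100 - legal_pct, 1)


# Pooled across all samples; this is not an average of the per-sample rates.
overall = pooled_deletion_rate(DELETED, COLLECTED)
illegal = {key: illegal_share(pct) for key, pct in LEGAL_SHARE.items()}

print(f"Pooled deletion rate: {overall:.2f}%")
for (country, platform), pct in illegal.items():
    print(f"{country} / {platform}: {pct}% of deleted comments judged illegal")
```

The illegal shares computed this way match the per-country figures listed in the key conclusions, since each is 100% minus the corresponding legal share. The pooled rate is dominated by whichever samples contributed the most comments, so it should not be read as typical of any single platform or country.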

Acknowledgments

The Future of Free Speech expresses its gratitude to August Vigen Smolarz and Eske Vinther-Jensen, both of Common Consultancy, for conducting the statistical analysis that forms the basis of this report and for co-drafting it, and to Edin Lind Ikanović and Tobias Bornakke of Analyse & Tal for developing a unique data-collection set-up that can identify deleted comments across several platforms. The Future of Free Speech also thanks Ioanna Tourkochoriti (Baltimore University), Martin Fertmann (Leibniz-Institute for Media Research | Hans-Bredow-Institut), and Mikael Ruotsi (Uppsala University) for their advice on the national legislation of France, Germany, and Sweden, respectively, and for their suggestions during the drafting of the report.

About The Future of Free Speech

About Common Consultancy

About Analyse & Tal

Sponsors

The Future of Free Speech thanks the below institutions for all their support in the creation of this report: